Research

My research interests lie at the intersection of deep learning, computer vision, and robotics—particularly in the areas of (multimodal) representation learning, self-supervised learning, open-endedness, and agents.

Publications

2025

- arXiv

Cambrian-S: Towards Spatial Supersensing in Video

Shusheng Yang*, Jihan Yang*, Pinzhi Huang†, Ellis Brown†, Zihao Yang, Yue Yu, Shengbang Tong, Zihan Zheng, Yifan Xu, Muhan Wang, Danhao Lu, Rob Fergus, Yann LeCun, Li Fei-Fei, and Saining Xie

arXiv preprint arXiv:2511.04670, 2025

We argue that progress in true multimodal intelligence calls for a shift from reactive, task-driven systems and brute-force long context towards a broader paradigm of supersensing. We frame spatial supersensing as four stages beyond linguistic-only understanding: semantic perception (naming what is seen), streaming event cognition (maintaining memory across continuous experiences), implicit 3D spatial cognition (inferring the world behind pixels), and predictive world modeling (creating internal models that filter and organize information). Current benchmarks largely test only the early stages, offering narrow coverage of spatial cognition and rarely challenging models in ways that require true world modeling. To drive progress in spatial supersensing, we present VSI-SUPER, a two-part benchmark: VSR (long-horizon visual spatial recall) and VSC (continual visual spatial counting). These tasks require arbitrarily long video inputs yet are resistant to brute-force context expansion. We then test data scaling limits by curating VSI-590K and training Cambrian-S, achieving +30% absolute improvement on VSI-Bench without sacrificing general capabilities. Yet performance on VSI-SUPER remains limited, indicating that scale alone is insufficient for spatial supersensing. We propose predictive sensing as a path forward, presenting a proof-of-concept in which a self-supervised next-latent-frame predictor leverages surprise (prediction error) to drive memory and event segmentation. On VSI-SUPER, this approach substantially outperforms leading proprietary baselines, showing that spatial supersensing requires models that not only see but also anticipate, select, and organize experience.
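The idea of using surprise (prediction error) to drive event segmentation can be illustrated with a minimal Python sketch. It assumes frames are already encoded as latent vectors and that some next-latent predictor is available. The names `segment_by_surprise` and `predict_next`, the squared-error surprise measure, and the fixed threshold are hypothetical choices for illustration, not the paper's implementation.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]


def squared_error(a: Vector, b: Vector) -> float:
    """Surprise measure (assumed): squared distance between two latents."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def segment_by_surprise(
    latents: List[Vector],
    predict_next: Callable[[Vector], Vector],
    threshold: float,
) -> List[int]:
    """Return the indices of frames that start a new event.

    A frame opens a new event when the predictor's error on it
    exceeds `threshold`. Frame 0 always opens the first event.
    """
    boundaries = [0]
    for t in range(1, len(latents)):
        surprise = squared_error(predict_next(latents[t - 1]), latents[t])
        if surprise > threshold:
            boundaries.append(t)
    return boundaries


def identity_predictor(z: Vector) -> Vector:
    """Toy stand-in predictor: assume the next latent equals the current one."""
    return z


frames = [[0.0, 0.0], [0.1, 0.0], [0.1, 0.1], [5.0, 5.0], [5.1, 5.0]]
print(segment_by_surprise(frames, identity_predictor, threshold=1.0))  # [0, 3]
```

In this toy input the only large jump is between frames 2 and 3, so a single boundary is placed at index 3. A learned predictor would replace `identity_predictor` in any realistic setting.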

@article{yang2025cambrian-s, author = {Yang, Shusheng and Yang, Jihan and Huang, Pinzhi and Brown, Ellis and Yang, Zihao and Yu, Yue and Tong, Shengbang and Zheng, Zihan and Xu, Yifan and Wang, Muhan and Lu, Danhao and Fergus, Rob and LeCun, Yann and Fei-Fei, Li and Xie, Saining}, title = {{Cambrian-S: Towards Spatial Supersensing in Video}}, journal = {arXiv preprint arXiv:2511.04670}, year = {2025}, }

- arXiv

Benchmark Designers Should “Train on the Test Set” to Expose Exploitable Non-Visual Shortcuts

arXiv preprint arXiv:2511.04655, 2025

Robust benchmarks are crucial for evaluating Multimodal Large Language Models (MLLMs). Yet we find that models can ace many multimodal benchmarks without strong visual understanding, instead exploiting biases, linguistic priors, and superficial patterns. This is especially problematic for vision-centric benchmarks that are meant to require visual inputs. We adopt a diagnostic principle for benchmark design: if a benchmark can be gamed, it will be. Designers should therefore try to “game” their own benchmarks first, using diagnostic and debiasing procedures to systematically identify and mitigate non-visual biases. Effective diagnosis requires directly “training on the test set”: probing the released test set for its intrinsic, exploitable patterns.

We operationalize this standard with two components. First, we diagnose benchmark susceptibility using a “Test-set Stress-Test” (TsT) methodology. Our primary diagnostic tool involves fine-tuning a powerful Large Language Model via k-fold cross-validation on exclusively the non-visual, textual inputs of the test set to reveal shortcut performance and assign each sample a bias score s(x). We complement this with a lightweight Random Forest-based diagnostic operating on hand-crafted features for fast, interpretable auditing. Second, we debias benchmarks by filtering high-bias samples using an “Iterative Bias Pruning” (IBP) procedure. Applying this framework to four benchmarks (VSI-Bench, CV-Bench, MMMU, and VideoMME), we uncover pervasive non-visual biases. As a case study, we apply our full framework to create VSI-Bench-Debiased, demonstrating reduced non-visual solvability and a wider vision-blind performance gap than the original.

@article{brown2025shortcuts, author = {Brown, Ellis and Yang, Jihan and Yang, Shusheng and Fergus, Rob and Xie, Saining}, title = {Benchmark Designers Should ``Train on the Test Set'' to Expose Exploitable Non-Visual Shortcuts}, journal = {arXiv preprint arXiv:2511.04655}, year = {2025}, }

- arXiv

SIMS-V: Simulated Instruction-Tuning for Spatial Video Understanding

arXiv preprint arXiv:2511.04668, 2025

Despite impressive high-level video comprehension, multimodal language models struggle with spatial reasoning across time and space. While current spatial training approaches rely on real-world video data, obtaining diverse footage with precise spatial annotations remains a bottleneck. To alleviate this bottleneck, we present SIMS-V, a systematic data-generation framework that leverages the privileged information of 3D simulators to create spatially-rich video training data for multimodal language models. Using this framework, we investigate which properties of simulated data drive effective real-world transfer through systematic ablations of question types, mixes, and scales. We identify a minimal set of three question categories (metric measurement, perspective-dependent reasoning, and temporal tracking) that prove most effective for developing transferable spatial intelligence, outperforming comprehensive coverage despite using fewer question types. These insights enable highly efficient training: our 7B-parameter video LLM fine-tuned on just 25K simulated examples outperforms the larger 72B baseline and achieves competitive performance with proprietary models on rigorous real-world spatial reasoning benchmarks. Our approach demonstrates robust generalization, maintaining performance on general video understanding while showing substantial improvements on embodied and real-world spatial tasks.

@article{brown2025simsv, title = {{SIMS-V}: Simulated Instruction-Tuning for Spatial Video Understanding}, author = {Brown, Ellis and Ray, Arijit and Krishna, Ranjay and Girshick, Ross and Fergus, Rob and Xie, Saining}, journal = {arXiv preprint arXiv:2511.04668}, year = {2025}, }

- COLM

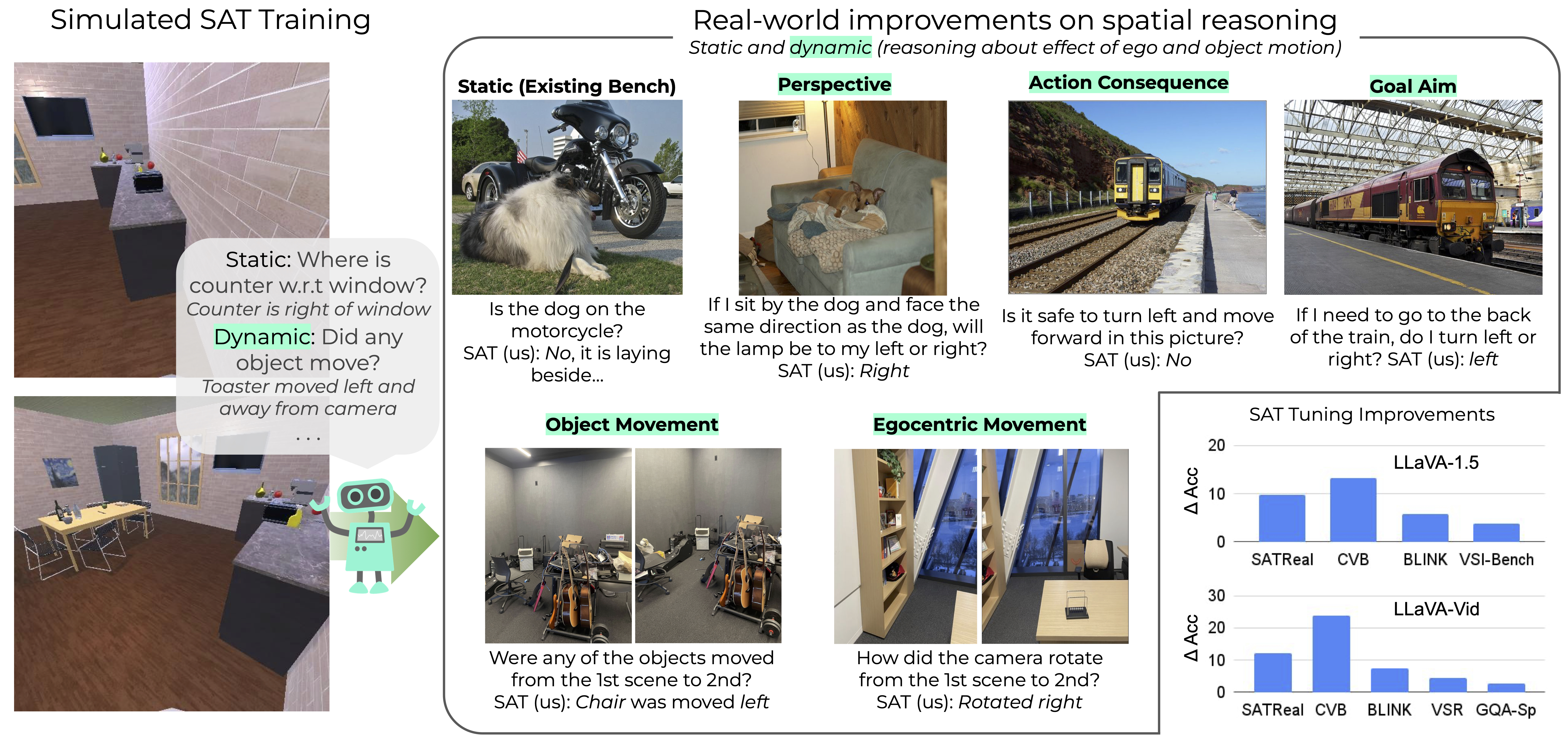

SAT: Dynamic Spatial Aptitude Training for Multimodal Language Models

Arijit Ray, Jiafei Duan†, Ellis Brown†, Reuben Tan, Dina Bashkirova, Rose Hendrix, Kiana Ehsani, Aniruddha Kembhavi, Bryan A. Plummer, Ranjay Krishna, Kuo-Hao Zeng, and Kate Saenko

In COLM, 2025

Reasoning about motion and space is a fundamental cognitive capability that is required by multiple real-world applications. While many studies highlight that large multimodal language models (MLMs) struggle to reason about space, they only focus on static spatial relationships, and not dynamic awareness of motion and space, i.e., reasoning about the effect of egocentric and object motions on spatial relationships. Manually annotating such object and camera movements is expensive. Hence, we introduce SAT, a simulated spatial aptitude training dataset comprising both static and dynamic spatial reasoning across 175K question-answer (QA) pairs and 20K scenes. Complementing this, we also construct a small (150 image-QAs) yet challenging dynamic spatial test set using real-world images. Leveraging our SAT datasets and 6 existing static spatial benchmarks, we systematically investigate what improves both static and dynamic spatial awareness. Our results reveal that simulations are surprisingly effective at imparting spatial aptitude to MLMs that translate to real images. We show that perfect annotations in simulation are more effective than existing approaches of pseudo-annotating real images. For instance, SAT training improves a LLaVA-13B model by an average 11% and a LLaVA-Video-7B model by an average 8% on multiple spatial benchmarks, including our real-image dynamic test set and spatial reasoning on long videos – even outperforming some large proprietary models. While reasoning over static relationships improves with synthetic training data, there is still considerable room for improvement for dynamic reasoning questions.

@inproceedings{ray2025sat, title = {SAT: Dynamic Spatial Aptitude Training for Multimodal Language Models}, author = {Ray, Arijit and Duan, Jiafei and Brown, Ellis and Tan, Reuben and Bashkirova, Dina and Hendrix, Rose and Ehsani, Kiana and Kembhavi, Aniruddha and Plummer, Bryan A. and Krishna, Ranjay and Zeng, Kuo-Hao and Saenko, Kate}, year = {2025}, booktitle = {COLM}, }

2024

- Cambrian-1: A Fully Open, Vision-Centric Exploration of Multimodal LLMs

Shengbang Tong*, Ellis Brown*, Penghao Wu*, Sanghyun Woo, Manoj Middepogu, Sai Charitha Akula, Jihan Yang, Shusheng Yang, Adithya Iyer, Xichen Pan, Ziteng Wang, Rob Fergus, Yann LeCun, and Saining Xie

In NeurIPS, 2024

Selected for Oral Presentation (1.8%) at NeurIPS 2024

We introduce Cambrian-1, a family of multimodal LLMs (MLLMs) designed with a vision-centric approach. While stronger language models can enhance multimodal capabilities, the design choices for vision components are often insufficiently explored and disconnected from visual representation learning research. This gap hinders accurate sensory grounding in real-world scenarios. Our study uses LLMs and visual instruction tuning as an interface to evaluate various visual representations, offering new insights into different models and architectures—self-supervised, strongly supervised, or combinations thereof—based on experiments with over 20 vision encoders. We critically examine existing MLLM benchmarks, addressing the difficulties involved in consolidating and interpreting results from various tasks, and introduce a new vision-centric benchmark, CV-Bench. To further improve visual grounding, we propose the Spatial Vision Aggregator (SVA), a dynamic and spatially-aware connector that integrates high-resolution vision features with LLMs while reducing the number of tokens. Additionally, we discuss the curation of high-quality visual instruction-tuning data from publicly available sources, emphasizing the importance of data source balancing and distribution ratio. Collectively, Cambrian-1 not only achieves state-of-the-art performance but also serves as a comprehensive, open cookbook for instruction-tuned MLLMs. We provide model weights, code, supporting tools, datasets, and detailed instruction-tuning and evaluation recipes. We hope our release will inspire and accelerate advancements in multimodal systems and visual representation learning.

@inproceedings{tong2024cambrian, title = {{Cambrian-1: A Fully Open, Vision-Centric Exploration of Multimodal LLMs}}, author = {Tong, Shengbang and Brown, Ellis and Wu, Penghao and Woo, Sanghyun and Middepogu, Manoj and Akula, Sai Charitha and Yang, Jihan and Yang, Shusheng and Iyer, Adithya and Pan, Xichen and Wang, Ziteng and Fergus, Rob and LeCun, Yann and Xie, Saining}, year = {2024}, booktitle = {NeurIPS}, }

- V-IRL: Grounding Virtual Intelligence in Real Life

Jihan Yang, Runyu Ding, Ellis Brown, Xiaojuan Qi, and Saining Xie

In ECCV, 2024

There is a sensory gulf between the Earth that humans inhabit and the digital realms in which modern AI agents are created. To develop AI agents that can sense, think, and act as flexibly as humans in real-world settings, it is imperative to bridge the realism gap between the digital and physical worlds. How can we embody agents in an environment as rich and diverse as the one we inhabit, without the constraints imposed by real hardware and control? Towards this end, we introduce V-IRL: a platform that enables agents to scalably interact with the real world in a virtual yet realistic environment. Our platform serves as a playground for developing agents that can accomplish various practical tasks and as a vast testbed for measuring progress in capabilities spanning perception, decision-making, and interaction with real-world data across the entire globe.

@inproceedings{yang2024virl, author = {Yang, Jihan and Ding, Runyu and Brown, Ellis and Qi, Xiaojuan and Xie, Saining}, title = {V-IRL: Grounding Virtual Intelligence in Real Life}, year = {2024}, booktitle = {ECCV} }

2023

- Thesis

Online Representation Learning on the Open Web

Ellis Brown

Carnegie Mellon University, 2023

Master’s Thesis. Committee: Deepak Pathak, Alexei Efros, and Deva Ramanan

@mastersthesis{brown2023online, author = {Brown, Ellis}, title = {Online Representation Learning on the Open Web}, school = {Carnegie Mellon University}, note = {Master's Thesis. Committee: Deepak Pathak, Alexei Efros, and Deva Ramanan}, year = {2023}, }

- Your Diffusion Model is Secretly a Zero-Shot Classifier

In ICCV, 2023

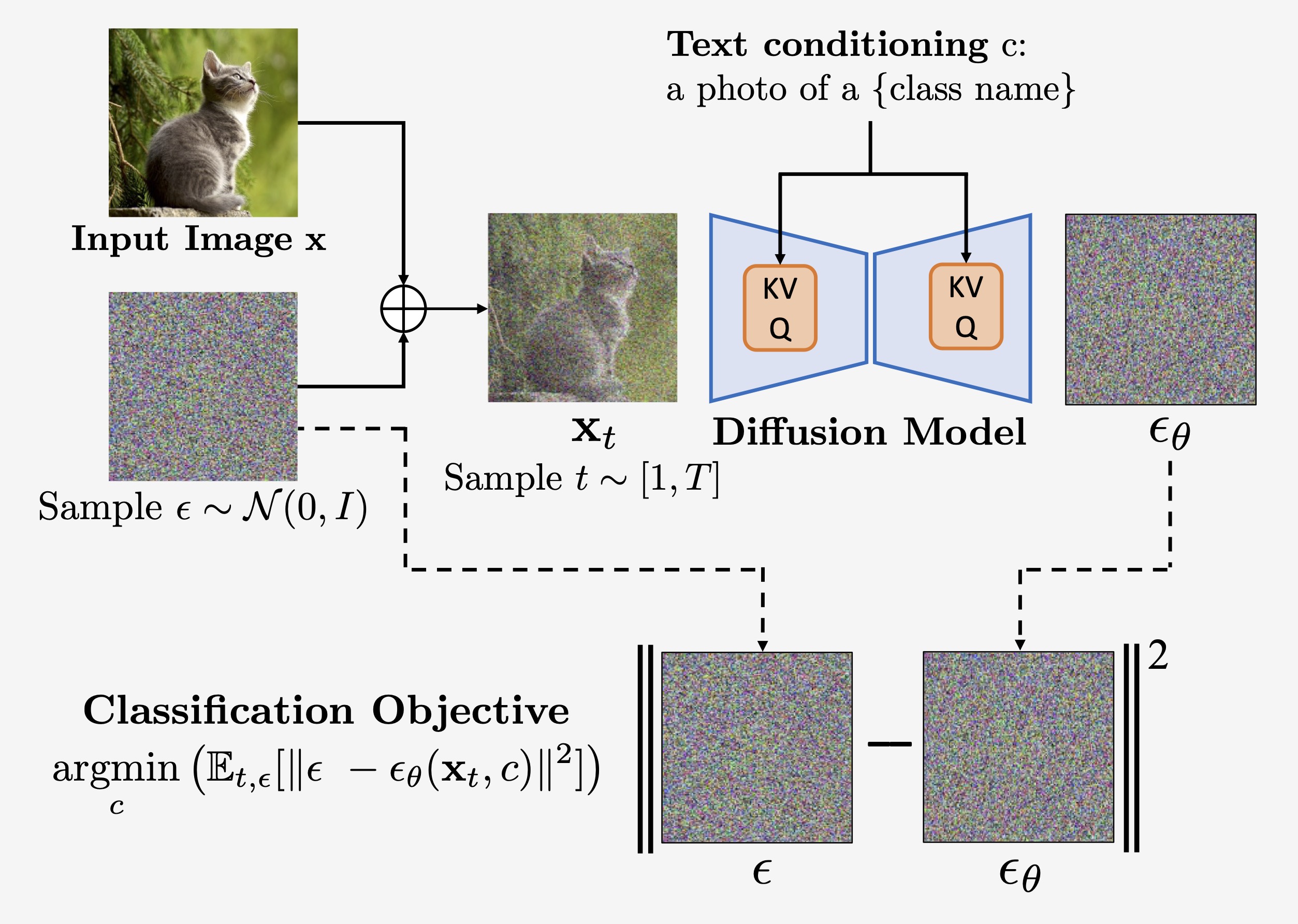

The recent wave of large-scale text-to-image diffusion models has dramatically increased our text-based image generation abilities. These models can generate realistic images for a staggering variety of prompts and exhibit impressive compositional generalization abilities. Almost all use cases thus far have solely focused on sampling; however, diffusion models can also provide conditional density estimates, which are useful for tasks beyond image generation.

In this paper, we show that the density estimates from large-scale text-to-image diffusion models like Stable Diffusion can be leveraged to perform zero-shot classification without any additional training. Our generative approach to classification, which we call Diffusion Classifier, attains strong results on a variety of benchmarks and outperforms alternative methods of extracting knowledge from diffusion models. Although a gap remains between generative and discriminative approaches on zero-shot recognition tasks, our diffusion-based approach has significantly stronger multimodal compositional reasoning ability than competing discriminative approaches.

Finally, we use Diffusion Classifier to extract standard classifiers from class-conditional diffusion models trained on ImageNet. Our models achieve strong classification performance using only weak augmentations and exhibit qualitatively better "effective robustness" to distribution shift. Overall, our results are a step toward using generative over discriminative models for downstream tasks.

@inproceedings{li2023diffusion, title = {Your Diffusion Model is Secretly a Zero-Shot Classifier}, author = {Li, Alexander C. and Prabhudesai, Mihir and Duggal, Shivam and Brown, Ellis and Pathak, Deepak}, year = {2023}, booktitle = {ICCV}, pages = {2206-2217}, }

- Internet Explorer: Targeted Representation Learning on the Open Web

In ICML, 2023

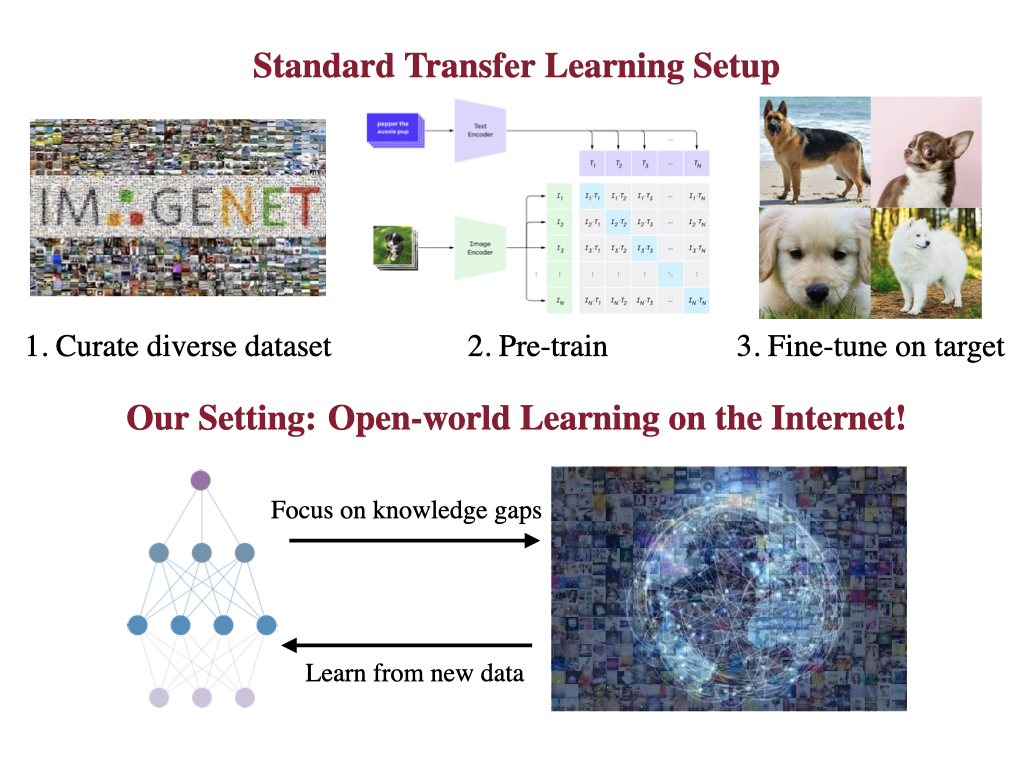

Vision models heavily rely on fine-tuning general-purpose models pre-trained on large, static datasets. These general-purpose models only understand knowledge within their pre-training datasets, which are tiny, out-of-date snapshots of the Internet—where billions of images are uploaded each day. We suggest an alternate approach: rather than hoping our static datasets transfer to our desired tasks after large-scale pre-training, we propose dynamically utilizing the Internet to quickly train a small-scale model that does extremely well on the task at hand. Our approach, called Internet Explorer, explores the web in a self-supervised manner to progressively find relevant examples that improve performance on a desired target dataset. It cycles between searching for images on the Internet with text queries, self-supervised training on downloaded images, determining which images were useful, and prioritizing what to search for next. We evaluate Internet Explorer across several datasets and show that it outperforms or matches CLIP oracle performance by using just a single GPU desktop to actively query the Internet for 30–40 hours.

@inproceedings{li2023internet, title = {Internet Explorer: Targeted Representation Learning on the Open Web}, author = {Li, Alexander C. and Brown, Ellis and Efros, Alexei A. and Pathak, Deepak}, year = {2023}, booktitle = {ICML}, }

2022

- Internet Curiosity: Directed Unsupervised Learning on Uncurated Internet Data

In ECCV Workshop on “Self Supervised Learning: What is Next?”, 2022

We show that a curiosity-driven computer vision algorithm can learn to efficiently query Internet text-to-image search engines for images that improve the model’s performance on a specified dataset. In contrast to typical self-supervised computer vision algorithms, which learn from static datasets, our model actively expands its training set with the most relevant images. First, we calculate the image-level curiosity reward as the negative distance of an image’s representation to its nearest neighbor in the targeted dataset. This reward is easily estimated using only unlabeled data from the targeted dataset, and can be aggregated into a query-level reward that effectively identifies useful queries. Second, we use text embedding similarity scores to propagate observed curiosity rewards to untried text queries. This efficiently identifies relevant semantic clusters without any need for class labels or label names from the targeted dataset. Our method significantly outperforms models that require 1-2 orders of magnitude more compute and data.
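The curiosity reward described above can be sketched in a few lines of Python. This is a minimal illustration, assuming images are already embedded as vectors. The Euclidean metric, the mean as the query-level aggregator, and the function names `image_reward` and `query_reward` are assumptions made here, since the abstract does not specify them.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def image_reward(embedding: Vector, target_embeddings: List[Vector]) -> float:
    """Image-level reward: negative distance to the nearest target embedding.

    Euclidean distance is an assumed metric.
    """
    return -min(math.dist(embedding, t) for t in target_embeddings)


def query_reward(image_embeddings: List[Vector], target_embeddings: List[Vector]) -> float:
    """Query-level reward: mean of the image-level rewards (assumed aggregator)."""
    rewards = [image_reward(e, target_embeddings) for e in image_embeddings]
    return sum(rewards) / len(rewards)


# Toy 2-D embeddings: query A returns images near the target set, query B does not.
targets = [[0.0, 0.0], [1.0, 0.0]]
query_a_images = [[0.0, 1.0], [1.0, 1.0]]
query_b_images = [[3.0, 4.0], [4.0, 4.0]]

print(query_reward(query_a_images, targets))  # -1.0
print(query_reward(query_a_images, targets) > query_reward(query_b_images, targets))  # True
```

Only unlabeled target embeddings are needed, which matches the abstract's point that the reward can be estimated without class labels.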

@inproceedings{li2022internetcuriosity, title = {Internet Curiosity: Directed Unsupervised Learning on Uncurated Internet Data}, author = {Li, Alexander C. and Brown, Ellis and Efros, Alexei A. and Pathak, Deepak}, year = {2022}, booktitle = {ECCV Workshop on ``Self Supervised Learning: What is Next?''}, address = {Tel Aviv, Israel}, }

2018

- An Architecture for Spatiotemporal Template-Based Search

Ellis Brown, Soobeen Park, Noel Warford, Adriane Seiffert, Kazuhiko Kawamura, Joseph Lappin, and Maithilee Kunda

Advances in Cognitive Systems, 2018

Visual search for a spatiotemporal target occurs frequently in human experience, from military or aviation staff monitoring complex displays of multiple moving objects to daycare teachers monitoring a group of children on a playground for risky behaviors. In spatiotemporal search, unlike more traditional visual search tasks, the target cannot be identified from a single frame of visual experience; as the target is a spatiotemporal pattern that unfolds over time, detection of the target must also integrate information over time. We propose a new computational cognitive architecture used to model and understand human visual attention in the specific context of visual search for a spatiotemporal target. Results from a previous human participant study found that humans show interesting attentional capacity limitations in this type of search task. Our architecture, called the SpatioTemporal Template-based Search (STTS) architecture, solves the same search task from the study using a wide variety of parameterized models that each represent a different cognitive theory of visual attention from the psychological literature. We present results from initial computational experiments using STTS as a first step towards understanding the computational nature of attentional bottlenecks in this type of search task, and we discuss how continued STTS experiments will help determine which theoretical models best explain the capacity limitations shown by humans. We expect that results from this research will help refine the design of visual information displays to help human operators perform difficult, real-world monitoring tasks.

@article{brown2018stts, title = {An Architecture for Spatiotemporal Template-Based Search}, author = {Brown, Ellis and Park, Soobeen and Warford, Noel and Seiffert, Adriane and Kawamura, Kazuhiko and Lappin, Joseph and Kunda, Maithilee}, year = {2018}, journal = {Advances in Cognitive Systems}, volume = {6}, pages = {101--118}, }

Talks

2021

- Linearly Constrained Separable Optimization

Ellis Brown, Nicholas Moehle, and Mykel J. Kochenderfer

In JuliaCon 2021 JuMP Track, Jul 2021

Many optimization problems involve minimizing a sum of univariate functions, each with a different variable, subject to coupling constraints. We present PiecewiseQuadratics.jl and SeparableOptimization.jl, two Julia packages for solving such problems when these univariate functions in the objective are piecewise-quadratic.
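To make the problem class concrete, here is a minimal Python sketch of one very simple instance: each univariate term is a single quadratic on an interval, and there is one coupling constraint fixing the sum of the variables. It is solved by bisection on the dual variable of that constraint. This is an illustrative textbook approach under those assumptions, not the algorithm used by PiecewiseQuadratics.jl or SeparableOptimization.jl, and `solve_separable_qp` is a hypothetical name.

```python
from typing import List, Tuple


def solve_separable_qp(
    p: List[float],
    c: List[float],
    bounds: List[Tuple[float, float]],
    total: float,
    iters: int = 100,
) -> List[float]:
    """Minimize sum_i 0.5 * p[i] * (x[i] - c[i])**2
    subject to sum_i x[i] == total and lo[i] <= x[i] <= hi[i], with p[i] > 0.
    """
    if not sum(lo for lo, _ in bounds) <= total <= sum(hi for _, hi in bounds):
        raise ValueError("coupling constraint is infeasible for the given bounds")

    def x_of(nu: float) -> List[float]:
        # For a fixed dual variable nu, each x[i] has a closed-form minimizer:
        # the unconstrained optimum c[i] - nu / p[i], clipped to its interval.
        return [max(lo, min(hi, ci - nu / pi)) for pi, ci, (lo, hi) in zip(p, c, bounds)]

    # Bracket nu so that every x[i] sits at its upper bound at nu_lo
    # and at its lower bound at nu_hi.
    nu_lo = min(pi * (ci - hi) for pi, ci, (_, hi) in zip(p, c, bounds))
    nu_hi = max(pi * (ci - lo) for pi, ci, (lo, _) in zip(p, c, bounds))

    # sum(x_of(nu)) is nonincreasing in nu, so bisection finds the root.
    for _ in range(iters):
        mid = 0.5 * (nu_lo + nu_hi)
        if sum(x_of(mid)) > total:
            nu_lo = mid
        else:
            nu_hi = mid
    return x_of(0.5 * (nu_lo + nu_hi))


x = solve_separable_qp(p=[1.0, 2.0], c=[0.0, 3.0], bounds=[(0.0, 5.0), (0.0, 5.0)], total=4.0)
print([round(v, 4) for v in x])
```

The key property is that the coupling constraint is the only thing linking the variables, so once its dual variable is fixed, every univariate subproblem can be solved independently.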

2019

- Modeling Uncertainty in Bayesian Neural Networks with Dropout: The effect of weight prior and network architecture selection

Ellis Brown*, Melanie Manko*, and Ethan Matlin*

In American Indian Science and Engineering Society National Conference, Oct 2019

Third Place, Graduate Student Research Competition

While neural networks are quite successful at making predictions, these predictions are usually point estimates lacking any notion of uncertainty. However, when fed data very different from its training data, it is useful for a neural network to realize that its predictions could very well be wrong and encode that information through uncertainty bands around its point estimate prediction. Bayesian Neural Networks trained with Dropout are a natural way of modeling this uncertainty with theoretical foundations relating them to Variational Inference approximating Gaussian Process posteriors. In this paper, we investigate the effects of weight prior selection and network architecture on uncertainty estimates derived from Dropout Bayesian Neural Networks.
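The core idea of reading uncertainty from dropout can be sketched in pure Python: keep dropout active at prediction time, run several stochastic forward passes, and report the spread of the outputs alongside their mean. The tiny one-hidden-layer network, its weights, and the names `forward_with_dropout` and `mc_dropout_predict` are made up for illustration. The architectures and weight priors studied in the talk are not reproduced here.

```python
import random
import statistics
from typing import List, Tuple


def forward_with_dropout(
    x: float,
    w_hidden: List[Tuple[float, float]],
    w_out: List[float],
    drop_prob: float,
    rng: random.Random,
) -> float:
    """One stochastic forward pass through a one-hidden-layer ReLU network."""
    hidden = []
    for weight, bias in w_hidden:
        h = max(0.0, weight * x + bias)  # ReLU activation
        keep = rng.random() >= drop_prob
        # Inverted dropout: rescale kept units so the expected activation is unchanged.
        hidden.append(h / (1.0 - drop_prob) if keep else 0.0)
    return sum(v * h for v, h in zip(w_out, hidden))


def mc_dropout_predict(
    x: float,
    w_hidden: List[Tuple[float, float]],
    w_out: List[float],
    drop_prob: float = 0.5,
    passes: int = 200,
    seed: int = 0,
) -> Tuple[float, float]:
    """Return (mean, standard deviation) over repeated dropout passes."""
    rng = random.Random(seed)
    samples = [forward_with_dropout(x, w_hidden, w_out, drop_prob, rng) for _ in range(passes)]
    return statistics.fmean(samples), statistics.pstdev(samples)


# Hypothetical fixed weights: (weight, bias) per hidden unit, then output weights.
W_HIDDEN = [(1.0, 0.0), (-1.0, 1.0), (0.5, 0.5)]
W_OUT = [1.0, 2.0, -1.0]

mean, std = mc_dropout_predict(2.0, W_HIDDEN, W_OUT, drop_prob=0.5)
print(f"prediction {mean:.3f} with uncertainty band +/- {std:.3f}")
```

With `drop_prob=0.0` every pass is identical, so the standard deviation collapses to zero and the result is an ordinary point estimate.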

2017

- Computational Cognitive Systems to Model Information Salience

Ellis Brown, Adriane Seiffert, Noel Warford, Soobeen Park, and Maithilee Kunda

In American Indian Science and Engineering Society National Conference, Sep 2017

This project seeks to model human information salience—in this case, how much a person will notice a piece of visual information—using an approach from artificial intelligence that involves building computational cognitive systems models of human performance on certain tasks. Too much visual information can be overwhelming, making it difficult for people to discern the important parts. This can be detrimental in many situations where visual attention is crucial, such as air traffic control systems, military information displays, or TSA screening stations. Results from a previous human participant study found that humans show interesting limitations in attentional capacity when asked to monitor a complex moving display. We create artificial computational agents based on various cognitive models of visual attention to solve the same visual information salience task and run computational experiments to measure cognitive performance. In follow-up work, we will compare the performance of our models to the human data to determine which models best explain the capacity limitations shown by people.